Introduction

Nonlinear programming (NLP) problems arise in many practical settings, such as project management, portfolio optimization, and resource allocation. Unlike linear programming problems, NLP problems have an objective function or constraints that are not linear functions of the decision variables, which makes optimal solutions harder to find and to verify.

Different Types of Nonlinear Programming Problems

Three problem classes that commonly come up alongside NLP are linear-quadratic programming (LQP), semidefinite programming (SDP), and mixed-integer linear programming (MILP). LQP problems have a quadratic objective and constraints that are linear functions of the decision variables. SDP problems require a matrix variable to be positive semidefinite. MILP problems combine integer and continuous decision variables; their objective and constraints are linear, and the difficulty comes from the integrality requirement rather than from nonlinear functions.

The Solver’s Algorithm

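Solvers for NLP problems are typically based on gradient-based methods, such as gradient descent, or on interior-point methods. These algorithms start from an initial point and iteratively update the decision variables to decrease (or, for maximization, increase) the objective function while respecting the constraints, and they stop once a convergence tolerance is met.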
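To make the iterative update concrete, here is a minimal sketch of plain gradient descent on a one-variable problem. It is an illustration under simplifying assumptions, not the algorithm of any particular solver: the objective `f(x) = (x - 3)^2`, the step size, and the tolerance are all hypothetical choices, and constraints are ignored entirely.

```python
def gradient_descent(grad, x0, step=0.1, tol=1e-8, max_iter=10_000):
    """Minimize a differentiable 1-D function, given its derivative `grad`."""
    x = x0
    for _ in range(max_iter):
        g = grad(x)
        if abs(g) < tol:  # stop once the slope is (nearly) zero
            break
        x -= step * g     # move against the gradient
    return x


# Hypothetical objective f(x) = (x - 3)^2, whose derivative is 2 * (x - 3).
x_star = gradient_descent(lambda x: 2.0 * (x - 3.0), x0=0.0)
print(x_star)
```

Because this objective is convex, the loop approaches the same minimizer, x = 3, from any starting point. Production solvers add line searches, constraint handling, and second-order information on top of this basic loop.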

Reasons Why the Solver Can Return Different Solutions

There are several reasons why the solver can return different solutions when optimizing a nonlinear programming problem:

- Local Optima: One of the most common reasons is that the solver has converged to a local optimum instead of the global optimum. For a minimization problem, a local optimum is a feasible point whose objective value is no larger than that of any nearby feasible point, even though a lower value may exist elsewhere in the feasible region. Nonconvex problems can have many such points, and most NLP solvers only guarantee convergence to one of them.

- Initial Guesses: The starting point largely determines which optimum an iterative solver reaches. If the initial guess is far from the global optimum, it may lie in the basin of attraction of a different local optimum, and the solver converges to a suboptimal solution. A guess close to the global optimum usually helps, but a guess that happens to sit near a local optimum can leave the solver stuck there.

- Constraint Violation: Solvers enforce constraints only up to a feasibility tolerance. If the problem is infeasible or nearly so, or if the solver stops before it has converged, it may return a point that slightly violates some constraints or that solves a relaxed version of the problem. Such a point can differ from, and be worse than, the true constrained optimum.

- Rounding Errors: Finally, rounding errors can also affect the solution. Decision variables are stored as floating-point numbers, so each arithmetic operation carries a small rounding error. These errors accumulate over the iterations, and in ill-conditioned problems or problems with several nearly equal optima they can be enough to tip the solver toward a different solution.
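The interaction between local optima and initial guesses can be seen in a small sketch. It assumes a hypothetical nonconvex objective `f(x) = x^4 - 3x^2 + x`, which has two local minima, and uses plain gradient descent with an arbitrary fixed step size; real solvers are more sophisticated, but the dependence on the starting point is the same in spirit.

```python
def f(x):
    """Hypothetical nonconvex objective with two local minima."""
    return x**4 - 3.0 * x**2 + x


def f_grad(x):
    """Derivative of f."""
    return 4.0 * x**3 - 6.0 * x + 1.0


def descend(x0, step=0.01, tol=1e-8, max_iter=100_000):
    """Fixed-step gradient descent started from x0."""
    x = x0
    for _ in range(max_iter):
        g = f_grad(x)
        if abs(g) < tol:
            break
        x -= step * g
    return x


# Two different initial guesses, one on each side of the central hump.
x_left = descend(-2.0)
x_right = descend(2.0)

for name, x in (("start -2.0", x_left), ("start +2.0", x_right)):
    print(f"{name}: x = {x:.4f}, f(x) = {f(x):.4f}")
```

The run started at -2 settles near x ≈ -1.30, which is the global minimum, while the run started at 2 settles near x ≈ 1.13, a local minimum with a higher objective value. Running the solver from several starting points and keeping the best result (multi-start) is a common, though not foolproof, remedy.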

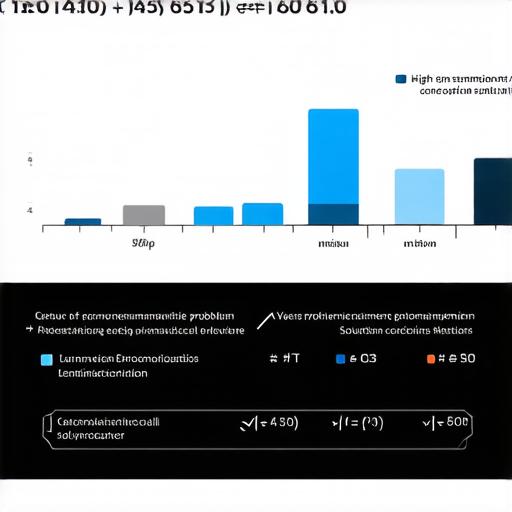

Case Study: Portfolio Optimization

Let’s consider an example of portfolio optimization using LQP. Suppose we have a portfolio of two stocks with weights w1 and w2 and expected returns r1 and r2, respectively. We want to minimize the portfolio variance subject to two constraints: the weights must sum to one, and the portfolio must achieve a target expected return E(r).

The objective function for this problem can be expressed as:

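$$\text{minimize} \quad \mathrm{Var}(x) = w_1^2 \, \mathrm{Var}(r_1) + w_2^2 \, \mathrm{Var}(r_2)$$

Here Var(x) is the variance of the portfolio return, and Var(r1) and Var(r2) are the variances of the two stocks' returns. This form assumes the two returns are uncorrelated; otherwise a covariance term 2 * w1 * w2 * Cov(r1, r2) is added.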

subject to the constraints:

$$w_1 + w_2 = 1, \qquad r_1 w_1 + r_2 w_2 = E(r)$$

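We can model this problem with a tool such as CVXPY, which passes it to a quadratic programming solver. Because the objective is a convex quadratic (for positive variances) and the constraints are linear, the problem has a single global minimum, and a correctly converged solver should reach the same weights from any starting point, up to its tolerance. So if one run initialized at w1 = 0.5 and w2 = 0.5 reports (0.49, 0.51) and another initialized at w1 = 0.6 and w2 = 0.4 reports (0.53, 0.47), at most one of them can be optimal. A gap like that points to a loose tolerance, early termination, or an ill-conditioned problem rather than to distinct optima. Different starting points lead to genuinely different optima only when the problem is nonconvex.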
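As a rough check on how the starting point matters for this case study, the sketch below minimizes the variance objective by plain gradient descent from two initial guesses. It makes several assumptions that are not in the text above: the variances 0.04 and 0.09 are hypothetical, the returns are treated as uncorrelated, the expected-return constraint is dropped, and the budget constraint is handled by substituting w2 = 1 - w1. It does not use CVXPY.

```python
def variance_grad(w1, var1, var2):
    """Derivative of w1^2 * var1 + (1 - w1)^2 * var2 with respect to w1."""
    return 2.0 * w1 * var1 - 2.0 * (1.0 - w1) * var2


def minimize_w1(w1_start, var1, var2, step=1.0, tol=1e-10, max_iter=10_000):
    """Fixed-step gradient descent on w1, with w2 = 1 - w1 substituted in."""
    w1 = w1_start
    for _ in range(max_iter):
        g = variance_grad(w1, var1, var2)
        if abs(g) < tol:
            break
        w1 -= step * g
    return w1


VAR1, VAR2 = 0.04, 0.09  # hypothetical return variances of the two stocks

w1_a = minimize_w1(0.5, VAR1, VAR2)  # initial guess (0.5, 0.5)
w1_b = minimize_w1(0.6, VAR1, VAR2)  # initial guess (0.6, 0.4)

# Setting the derivative to zero gives w1 = var2 / (var1 + var2).
w1_closed_form = VAR2 / (VAR1 + VAR2)

for label, w1 in (("start 0.5", w1_a), ("start 0.6", w1_b), ("closed form", w1_closed_form)):
    print(f"{label}: w1 = {w1:.6f}, w2 = {1.0 - w1:.6f}")
```

Under these assumptions the objective is convex in w1, so both runs agree with the closed-form weight to within the stopping tolerance. Comparing runs from several starting points in this way is a simple diagnostic: agreement suggests a single optimum was reached, while disagreement beyond the tolerance suggests nonconvexity or a run that stopped before converging.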

Conclusion

The solver can return different solutions to a nonlinear programming problem for several reasons: local optima, the choice of initial guesses, constraint violation, and rounding errors.